How to Build a Product-Centric Data Science Organization

Realistically, most data science is heads-down, unpredictable activity. Typically, a data scientist is given an objective, such as “tell me the part-worth of TV in my advertising mix” or “come up with a classifier to put a lead into the correct segment,” and has to figure out how to solve the problem with a vast array of tools and potential data sources. This might take hours or days, and the iterations and deep thought required to reach an answer are substantial and (at least in my experience) vary considerably from task to task.

What am I driving at? Data science is, at its core, an individual voyage of discovery. Big-S science provides frameworks for structuring this voyage: you know, the old “hypothesis / background / procedure / results / discussion” framework that we were all taught in high school chemistry. But ultimately, inside of “procedure,” there are hours and hours spent arms-deep in the data, pounding away at StackOverflow, and that work is really hard to codify.

This is a tough challenge for organizations because, in my experience, organizations don’t function well, or scale, when they rely on the “individual hero” or “craftsman” model.

To scale, organizations need to productize: that is, find common approaches, algorithms, methods, and data structures to solve common problems.

These common approaches can still be configurable. Ultimately, though, a product approach needs to scale, improve over time, and be reusable by others.

I’m not saying that data scientists need to abandon all creativity and individuality. But I do think that truly scalable data science organizations are possible, and that they will ultimately make everyone, including the super-smart, creative data scientist, happier in their job. There will be less reinventing of the wheel, less manual work, and a better understanding across the organization of the value being provided.

Fortunately, much of the infrastructure and many of the best practices for scalability already exist. We can borrow the best parts of the software development lifecycle, specifically the Agile methodology, to evolve into a “product-centric” data science organization. I’ve had success building this kind of organization, and below are the nine best practices that have worked for me, along with some specific tools, frameworks, and processes that go with them.

1) Implement Version Control

It still surprises me how many data science organizations don’t use version control. Whatever you’re using (Git hosted on GitHub, Bitbucket, etc.), moving code that is sitting around untracked on C:\ drives or on some SharePoint site into version control is low-hanging fruit. Every data scientist should not only be using version control but should also follow a branching strategy: Don’t commit to master! Do have a coherent naming convention! Do write commit messages that describe what you did!
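To make “a coherent naming convention” concrete, here is a minimal sketch of a check a team might run from a Git hook or CI step. It rejects work directly on protected branches and enforces names like `feature/DS-42-lead-classifier`. The pattern, the branch prefixes, the ticket format, and the `check_branch_name` helper are all hypothetical; adapt them to whatever convention your team agrees on.

```python
import re

# Hypothetical convention: <type>/<TICKET-123>-<short-description>,
# e.g. "feature/DS-42-lead-classifier". Adjust to your team's rules.
BRANCH_PATTERN = re.compile(r"^(feature|fix|experiment)/[A-Z]+-\d+-[a-z0-9-]+$")

# Branches nobody should commit to directly (assumed names).
PROTECTED_BRANCHES = {"master", "main"}


def check_branch_name(name: str) -> list[str]:
    """Return a list of problems with a branch name; an empty list means OK."""
    problems = []
    if name in PROTECTED_BRANCHES:
        problems.append(f"'{name}' is protected: work on a feature branch instead")
    elif not BRANCH_PATTERN.match(name):
        problems.append(f"'{name}' does not match <type>/<TICKET-123>-<description>")
    return problems
```

In practice you might pass it the output of `git rev-parse --abbrev-ref HEAD`. Server-side branch protection on your hosting platform is the more robust way to block direct commits to master; a check like this mainly keeps names consistent.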

2) Separate Projects from Libraries

Data scientists should do individualistic, heads-down work, but they also need to be trained to notice when their work has gone from a one-off to something reusable, and then transition it into a library (or package). Libraries and packages have different requirements when it comes to documentation (e.g., README files), parameterization, and general code elegance. Code reviews are a great help in recognizing when a “project” is turning into a “product.”
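As a minimal, hypothetical illustration of that transition: the one-off version hard-codes a file path and a threshold, while the library version exposes its thresholds as parameters, documents its inputs and outputs, and can be imported and tested by others. The `assign_segment` function, the thresholds, and the segment names are invented for this sketch, and the simple size rule stands in for a real classifier.

```python
from collections.abc import Sequence

# One-off "project" style, shown only as a comment for contrast:
#   leads = load("C:/Users/me/leads_march.csv")
#   for lead in leads:
#       lead["segment"] = "enterprise" if lead["employees"] > 1000 else "smb"

# Hypothetical defaults: (minimum_employees, segment) pairs.
DEFAULT_THRESHOLDS: Sequence[tuple[int, str]] = (
    (1000, "enterprise"),
    (100, "mid-market"),
    (0, "smb"),
)


def assign_segment(
    employees: int,
    thresholds: Sequence[tuple[int, str]] = DEFAULT_THRESHOLDS,
) -> str:
    """Assign a lead to a segment based on company size.

    Args:
        employees: Number of employees at the lead's company.
        thresholds: (minimum_employees, segment) pairs. They are checked
            from the largest minimum to the smallest, in any input order.

    Returns:
        The first segment whose minimum is <= employees.

    Raises:
        ValueError: If employees is negative or no threshold matches.
    """
    if employees < 0:
        raise ValueError("employees must be non-negative")
    # Sort descending so the most specific (largest) threshold wins.
    for minimum, segment in sorted(thresholds, reverse=True):
        if employees >= minimum:
            return segment
    raise ValueError(f"no segment matches {employees} employees")
```

Because the thresholds are a parameter with a documented default, another analyst can reuse the function with their own cut-offs instead of copying and editing the script.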

3) Implement Reproducibility

A data science organization should avoid, at all costs, producing reports, PowerPoints, dashboards, etc. that were created by dragging, dropping, and clicking. Instead, it should invest the extra time in generating that PowerPoint programmatically, using officer in R or python-pptx in Python, or in coding that dashboard with a tool like Shiny. If you need a report generated periodically, build it in something like RMarkdown or knitpy, or just point people to the Jupyter Notebook. This pays dividends both when someone literally asks you “how did you get that number?” and whenever you want to reuse something you just built.
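The underlying principle is that every number in a deliverable comes from code you can rerun. Tools like python-pptx, officer, Shiny, and RMarkdown apply this to slides, dashboards, and documents. As a dependency-free illustration, the minimal sketch below regenerates a Markdown report from raw records. The `build_report` and `conversion_rate` functions, the CSV columns, and the conversion-rate metric are hypothetical.

```python
import csv
import io
from datetime import date


def conversion_rate(rows: list[dict[str, str]]) -> float:
    """Share of leads whose 'converted' field is '1' (hypothetical column)."""
    converted = sum(1 for row in rows if row["converted"] == "1")
    return converted / len(rows)


def build_report(csv_text: str, as_of: date) -> str:
    """Render a Markdown report entirely from raw CSV text.

    Rerunning this on the same input reproduces the same numbers, and the
    calculation behind each number is visible in the code.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))

    # Group rows by channel for the per-channel table.
    by_channel: dict[str, list[dict[str, str]]] = {}
    for row in rows:
        by_channel.setdefault(row["channel"], []).append(row)

    lines = [
        f"# Lead conversion report ({as_of.isoformat()})",
        "",
        f"Leads analyzed: {len(rows)}",
        f"Overall conversion rate: {conversion_rate(rows):.1%}",
        "",
        "| Channel | Leads | Conversion rate |",
        "| --- | --- | --- |",
    ]
    for channel in sorted(by_channel):
        channel_rows = by_channel[channel]
        lines.append(
            f"| {channel} | {len(channel_rows)} | {conversion_rate(channel_rows):.1%} |"
        )
    return "\n".join(lines)
```

For example, `build_report("channel,converted\nemail,1\nemail,0\ntv,1\ntv,1\n", date(2024, 1, 31))` produces a report with an overall conversion rate of 75.0%, and calling it again on the same input yields an identical string.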

4) Implement Agile Project / Product Management

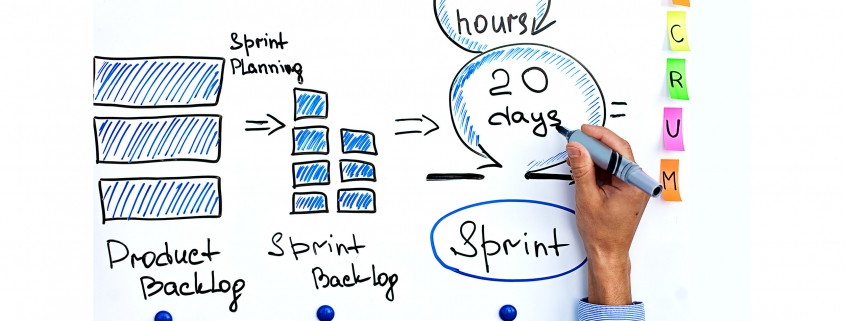

If I had to pick, this might be the most important best practice. There are many aspects to Agile, but the concepts of the sprint, the backlog, and strategic priorities, arrayed on some kind of shared board, really help data science teams work. For data science, I prefer a less structured tool like Trello over a more structured tool like Jira, because lists can evolve flexibly and sprints can be less rigorously defined. If a data science team is split across a bunch of projects, those can be separate boards or lists, depending on how you want to roll. What matters is that everyone can clearly see what everyone else is working on, along with what is up next, for a longer-term picture. Writing good functional requirements on each card (Trello’s term for a ticket) is an art in and of itself. While it has some things in common with software, writing up tasks and stories for data science has its own unique tips and tricks (out of scope for this post).

5) Write Down Procedures

Duh, right? But this is often missed. Every data scientist might have their own way of doing things, and that’s fine within reason, but ultimately procedures, environments, security protocols, etc. all need to be written down. I’ve had great success keeping these as Markdown READMEs on GitHub, but as long as there’s a single source of truth and people know where to find it, you’re good.

6) Have Code Style Guidelines

It’s not essential, but standardizing code can help a lot with productization. For example: Do comments go on separate lines or to the right of code? What is the right level of commenting? How important is it that data scientists make their code Pythonic? Should helper functions go into one file, or be split up (and at what point)? This might evolve over time, as lead data scientists develop a point of view that is evidence-based rather than just based on personal preference.

7) Have Standups and Demos

Again, basic Agile stuff, but be sure to have your data science team get together in the morning to go around the horn, talk about what they’re doing today and any blockers they have, and generally stay on the same page. Some people push for this to be a “just the facts” meeting, and I get that, but I personally err on the side of letting people talk. Ideas get generated, people cross-pollinate, and, in my opinion, a few extra minutes of talking ultimately leads to non-linear gains in productivity.

8) Have Standard Data Definitions

If you’re dealing with the same data structures over and over, don’t let every data scientist come up with their own way of describing the data. To use an example from the sales and marketing world, if you’re constantly looking at opportunities, take the time to write an XML (or flat) definition of an opportunity. Keep it in version control (in the libraries section) and reuse it. Take the time to have your database team or developers write an endpoint that represents it, and use it in all your code. In the long run, this parameterizes your variables and makes them product-ready. Important: Don’t write a different data definition for every system. Instead, spend a couple of hours writing adapters that map each system onto your standard definition, so others can figure it out.
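Here is a minimal sketch of one standard definition plus per-system adapters, written as a Python dataclass rather than XML. The `Opportunity` fields and both source formats (a CRM export and a billing system that stores amounts in cents) are hypothetical; the point is that each system gets a small adapter into the one shared definition instead of its own definition.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any


@dataclass(frozen=True)
class Opportunity:
    """Team-wide standard definition of a sales opportunity (hypothetical fields)."""

    opportunity_id: str
    account_name: str
    amount_usd: float
    stage: str  # normalized to lowercase
    close_date: date


def from_crm(record: dict[str, Any]) -> Opportunity:
    """Adapter for a hypothetical CRM export with its own field names."""
    return Opportunity(
        opportunity_id=str(record["opp_id"]),
        account_name=record["account"],
        amount_usd=float(record["value"]),  # exported as a string
        stage=record["stage"].lower(),
        close_date=date.fromisoformat(record["close"]),
    )


def from_billing(record: dict[str, Any]) -> Opportunity:
    """Adapter for a hypothetical billing system that stores amounts in cents."""
    return Opportunity(
        opportunity_id=f"BILL-{record['deal_no']}",
        account_name=record["customer"],
        amount_usd=record["amount_cents"] / 100,
        stage=record["status"].lower(),
        close_date=date.fromisoformat(record["expected_close"]),
    )
```

Downstream code then only ever sees `Opportunity`. Adding a new system means writing one more adapter, not teaching every analysis a new set of field names.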

9) Enlist the Data Team

The folks that are responsible for building your data warehouse/lake should also get in on the fun. While I find that database developers tend to do their own thing, all of the SQL code they are writing should be in the same version control system, and it’s helpful to cross-pollinate with the data scientists. A lot of times, huge light bulbs go off when the data scientists tell the database folks why they need a certain view. Conversely, data scientists who grumble about latency or speed might see the light when they hear the database engineer’s side of the story.

There are probably many more best practices I could share, but the ones listed above are the lowest-hanging fruit. At MarketBridge, we have the added layer of essentially exposing these best practices to our clients, making them part of our product-centric data science team. It’s how we make sure that our results are actionable and reproducible. It’s also how we get prototypes from a data science-generated idea into a formalized product. That’s a topic for another time.